Value Iteration algorithm

Value Iteration differs from policy iteration. It updates the value function using the Bellman Optimality Equation...

Given an understanding of policy iteration and truncated policy iteration, how does value iteration work? Can you first outline the algorithm step by step, differentiate it from policy iteration, and then provide a comprehensive example?

Certainly! Let's dive deep into Value Iteration.

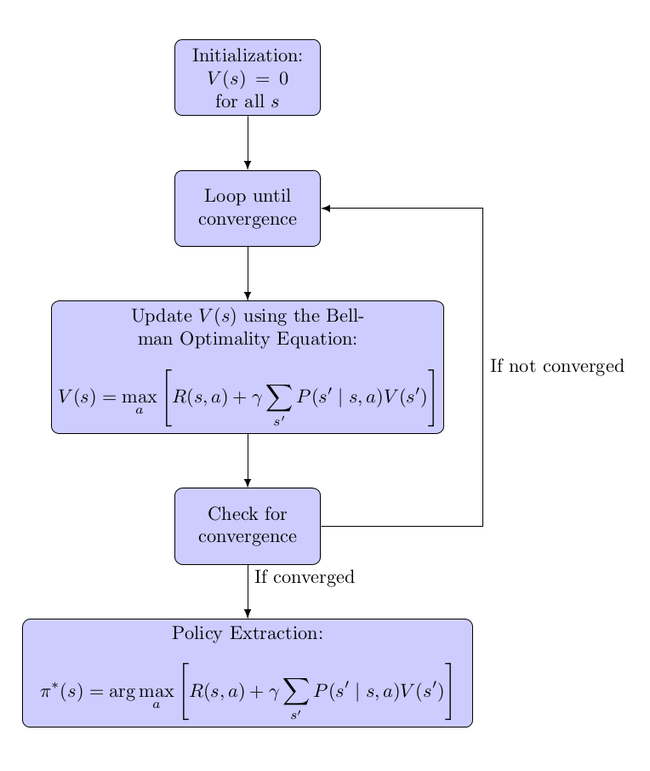

Value Iteration Algorithm:

1. Initialization:

- Start with an arbitrary value function $V(s)$ for all states $s$, often initialized to zeros.

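2. Loop until convergence:

- For each state $s$:

- Update $V(s)$ using the Bellman Optimality Equation:

$$V(s) \leftarrow \max_{a} \sum_{s'} P(s' \mid s, a) \left[ R(s, a, s') + \gamma V(s') \right]$$

Here $P(s' \mid s, a)$ is the transition probability, $R(s, a, s')$ is the reward, and $\gamma$ is the discount factor.

- Repeat this process until the largest change in the value function across all states falls below a chosen threshold, indicating convergence.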
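The loop above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the two-state MDP `P`, the discount factor `GAMMA`, and the threshold `THETA` are all hypothetical values chosen for the example.

```python
# Hypothetical two-state MDP, used only for illustration.
# P[s][a] is a list of (probability, next_state, reward) triples.
P = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 1.0)]},
    "B": {"stay": [(1.0, "B", 2.0)], "go": [(1.0, "A", 0.0)]},
}
GAMMA = 0.5    # assumed discount factor
THETA = 1e-10  # assumed convergence threshold


def backup(V, s, a):
    """Expected one-step return of taking action a in state s under V."""
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in P[s][a])


def value_iteration():
    V = {s: 0.0 for s in P}  # step 1: initialize all values to zero
    while True:
        delta = 0.0
        for s in P:  # step 2: sweep over every state
            new_v = max(backup(V, s, a) for a in P[s])  # Bellman optimality backup
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v  # in-place update within the sweep
        if delta < THETA:  # largest change is below the threshold
            return V


V = value_iteration()
```

In this toy MDP, staying in `B` forever is worth 2 / (1 - 0.5) = 4, and going from `A` to `B` is worth 1 + 0.5 * 4 = 3, so the loop should settle near those values.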

3. Policy Extraction:

- Once the value function has converged, for each state $s$:

- Extract the optimal policy $\pi^*(s)$ by selecting the action that maximizes the expected value:

$$\pi^*(s) = \arg\max_{a} \sum_{s'} P(s' \mid s, a) \left[ R(s, a, s') + \gamma V(s') \right]$$

where $P$, $R$, and $\gamma$ are the transition probabilities, rewards, and discount factor.
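Policy extraction is a single greedy pass over the states. The sketch below assumes a hypothetical two-state MDP `P` with discount `GAMMA = 0.5`, and takes its converged values `V` as given (3 for `A`, 4 for `B`); none of these names come from the original text.

```python
# Hypothetical two-state MDP: P[s][a] = [(probability, next_state, reward), ...]
P = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 1.0)]},
    "B": {"stay": [(1.0, "B", 2.0)], "go": [(1.0, "A", 0.0)]},
}
GAMMA = 0.5                # assumed discount factor
V = {"A": 3.0, "B": 4.0}   # converged optimal values for this toy MDP


def backup(V, s, a):
    """Expected one-step return of taking action a in state s under V."""
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in P[s][a])


# For each state, pick the action with the largest one-step lookahead value.
policy = {s: max(P[s], key=lambda a: backup(V, s, a)) for s in P}
```

Here the greedy choice is `go` in `A` (3 versus 1.5 for staying) and `stay` in `B` (4 versus 1.5 for going).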

Difference from Policy Iteration:

- Policy Iteration alternates between two distinct steps: Policy Evaluation (where the value function for a given policy is computed to convergence) and Policy Improvement (where the policy is updated based on the value function).

- Value Iteration compresses these two steps into one. It updates the value function using the Bellman Optimality Equation, and this process implicitly defines the policy at each step. Only after the value function converges is the optimal policy explicitly extracted.

While Policy Iteration fully evaluates a policy before improving it, Value Iteration constantly improves its value estimates and implicitly its policy in each iteration.
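For contrast, here is a rough sketch of Policy Iteration's two-phase structure. The MDP `P`, the discount `GAMMA`, and the threshold `theta` are hypothetical; the point is only that an explicit `policy` is evaluated to convergence before each improvement step, whereas Value Iteration folds the maximization into every backup.

```python
# Hypothetical two-state MDP: P[s][a] = [(probability, next_state, reward), ...]
P = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 1.0)]},
    "B": {"stay": [(1.0, "B", 2.0)], "go": [(1.0, "A", 0.0)]},
}
GAMMA = 0.5  # assumed discount factor


def q(V, s, a):
    """Expected one-step return of taking action a in state s under V."""
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in P[s][a])


def policy_iteration(theta=1e-10):
    policy = {s: next(iter(P[s])) for s in P}  # arbitrary initial policy
    V = {s: 0.0 for s in P}
    while True:
        # Phase 1, policy evaluation: iterate to convergence for the FIXED policy.
        while True:
            delta = 0.0
            for s in P:
                new_v = q(V, s, policy[s])  # no max here: the policy picks the action
                delta = max(delta, abs(new_v - V[s]))
                V[s] = new_v
            if delta < theta:
                break
        # Phase 2, policy improvement: act greedily with respect to V.
        stable = True
        for s in P:
            best = max(P[s], key=lambda a: q(V, s, a))
            if q(V, s, best) > q(V, s, policy[s]) + 1e-12:
                policy[s] = best
                stable = False
        if stable:  # no state changed its action, so the policy is optimal
            return V, policy


pi_V, pi_policy = policy_iteration()
```

On this toy problem the outer loop should run twice: the initial all-`stay` policy is evaluated, improved to `go` in `A`, and then confirmed stable.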

Example: Simple Maze Navigation:

Imagine a robot in a 3x3 maze:

- S: Start.

- G: Goal with a reward of +10.

- X: An obstacle.

- -: Open cells with a reward of -1 (a penalty to encourage the robot to find the shortest path).

- Actions: Up, Down, Left, Right. If an action would move the robot off the grid or into the obstacle, the robot stays in the current position.
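The movement rule can be written as a small transition function. The layout below is an assumption made for illustration (S top-left, X in the center, G bottom-right), as is the reward convention: +10 for entering G and -1 for every other move, including moves that are blocked. Other readings of the reward scheme would give different numbers.

```python
# Hypothetical 3x3 layout; the position of X is an assumption.
GRID = [
    "S--",
    "-X-",
    "--G",
]
MOVES = {"Up": (-1, 0), "Down": (1, 0), "Left": (0, -1), "Right": (0, 1)}


def step(state, action):
    """Return (next_state, reward) for a deterministic move from state."""
    r, c = state
    dr, dc = MOVES[action]
    nr, nc = r + dr, c + dc
    off_grid = not (0 <= nr < 3 and 0 <= nc < 3)
    if off_grid or GRID[nr][nc] == "X":
        nr, nc = r, c  # blocked: the robot stays in its current cell
    # Assumed reward convention: +10 on entering G, -1 otherwise.
    reward = 10.0 if GRID[nr][nc] == "G" else -1.0
    return (nr, nc), reward
```

For example, `step((0, 0), "Up")` leaves the robot at `(0, 0)`, and `step((2, 1), "Right")` moves it into G.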

Value Iteration Process:

- Initialization: All cell values are set to 0.

- Iteration: For the cell labeled S:

- Up or Left: (robot stays in place)

- Right: (moving to the open cell)

- Down:

The value remains 0 as all actions have the same value.

For the cell next to G (bottom-center):

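- Best move is Right towards G: on the first sweep this gives $10 + \gamma \cdot 0 = 10$, since entering G yields +10 and all values start at 0.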

After several iterations, the value of each cell will reflect the maximum expected cumulative reward obtainable from that position.

- Policy Extraction: The robot will choose actions based on the maximum expected value of adjacent cells. For instance, in the cell next to G, the best action is to move right, towards G.

With Value Iteration, the robot iteratively refines its value estimates for each cell in the maze until they converge. Once converged, the robot can then determine the optimal action in each cell to reach the goal efficiently. The primary difference from Policy Iteration is that the robot doesn't work with an explicit policy during the iteration process, only extracting it at the end.
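The whole maze example can be run end to end with a short script. Everything specific here is an assumption for illustration: the layout (X in the center), the reward convention (+10 on entering G, -1 for any other move), the discount `GAMMA = 0.9`, and treating G as a terminal cell with value 0.

```python
GRID = ["S--", "-X-", "--G"]  # hypothetical layout; the position of X is assumed
MOVES = {"Up": (-1, 0), "Down": (1, 0), "Left": (0, -1), "Right": (0, 1)}
GAMMA = 0.9  # assumed discount factor

# Non-terminal, non-obstacle cells are the states whose values we learn.
STATES = [(r, c) for r in range(3) for c in range(3) if GRID[r][c] not in "XG"]


def step(state, action):
    """Deterministic move; blocked moves leave the robot in place."""
    r, c = state
    dr, dc = MOVES[action]
    nr, nc = r + dr, c + dc
    if not (0 <= nr < 3 and 0 <= nc < 3) or GRID[nr][nc] == "X":
        nr, nc = r, c
    reward = 10.0 if GRID[nr][nc] == "G" else -1.0  # assumed reward convention
    return (nr, nc), reward


def q_value(V, s, a):
    s2, reward = step(s, a)
    return reward + GAMMA * V.get(s2, 0.0)  # G is absent from V, so it counts as 0


def value_iteration(theta=1e-9):
    V = {s: 0.0 for s in STATES}  # initialization: all cell values are 0
    while True:
        delta = 0.0
        for s in STATES:
            new_v = max(q_value(V, s, a) for a in MOVES)
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v
        if delta < theta:
            return V


V = value_iteration()
# Policy extraction happens only once, after the values have converged.
policy = {s: max(MOVES, key=lambda a: q_value(V, s, a)) for s in STATES}
```

Under these assumptions the two cells adjacent to G end up with value 10, and each extra step away from G costs -1 and one factor of 0.9, so the start cell four moves away comes out at 4.58. The extracted policy moves Right from the bottom-center cell, matching the example above.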
